Nov 1st, ‘21/4 min read

Sleep Friendly Alerting

We've all been woken up by that dreaded Slack notification at ungodly hours, only to realise that the alert was all smoke and no fire. The perfect recipe for dread and alert fatigue.

If you are a Site Reliability Engineer (SRE), we are sure you have that one email inbox or channel where you are continuously bombarded with alert notifications, and the unread count runs into the thousands, if not millions! However, due to FOMOA (fear of missing out on an alert 😅), you dare NOT snooze this channel. “What if I miss that one important alert?” you think.

And we can all agree that this approach is not feasible - alerts do get missed, things break and 3am war rooms are not uncommon!

Today, therefore, we want to talk about a common aspect of an SRE’s life - ALERT FATIGUE. The term, also known as alarm fatigue or pager fatigue, refers to the situation wherein one is exposed to so many frequent alarms that one becomes desensitized to them.

In simpler words, alert fatigue is caused by the overwhelming number of alerts received. With high alert exposure over a long period, the engineer naturally pays less attention to each one. This inattention can lead to delayed responses, missed critical warnings, or even system failures. It reminds us of the famous story of “The Boy Who Cried Wolf” - your alerting application being the boy who continuously screams about the “wolf” attacking your system.

It is also interesting to note that this is not just a software problem - several fatal incidents in the medical and aviation industries have been attributed to alert fatigue (beyond the scope of this article, but worth reading up on if you are interested).

A Day in the Life of an SRE

With the emergence of cloud and digital transformation, the volume of monitoring metrics and alert notifications has multiplied. System alerts, deployment mails, tickets, logs, codebase popups, environment bug alarms, etc. all compete for your attention. And if you’re also scrolling through messages or social media on your phone, your multitasking mind is stretched a little further.

This is where the urge comes in to turn off, or go numb to, low-priority alerts like regular system updates or repetitive deployment mails. These can be categorized as non-critical alerts that require no action. But now imagine the day a security breach causes a major system blocker, and that alert lands in the same stream.

Turning off alerts altogether, or ignoring them, makes the whole idea of alerting meaningless. Yet the burnout from countless notification sounds across apps like Teams, Slack, Skype and Outlook gradually numbs you to them, and it is perfectly natural to switch off the noise or deliberately ignore it once in a while.

But there has to be a balance between silencing alerts and not missing the potentially critical ones. Outside of work, a good example is Apple’s new iOS update, which lets you focus by delivering fewer notifications during your focus time and tells your contacts you are unavailable when they try to reach you. And if you don’t want to miss your favorite social media notifications during the day, you can tweak the settings.

Along similar lines, regular infrastructure monitoring and threshold tweaks are necessary to create the right amount of “noise” - a balance of silence and noise that lets you stay productive while keeping every alert you do receive legitimate.

How to have a Perfect Balance of Alerts

We already know that DevOps engineers and SREs wear multiple hats and are exposed to alerts and context switching 24x7. Even acknowledging an open ticket, which takes only a few seconds, can break your focus and hurt your productivity.

Low-priority alerts can be duplicate, irrelevant, or correlated. There is, therefore, a need to intelligently separate genuine alerts from false positives, without creating false negatives by suppressing real ones. Additionally, fusing correlated alerts into a single event diagnostic, instead of sending a stream of monotonous emails, keeps your inbox from flooding. While it is good practice for users to define rules and workflows that reduce mail clutter and message pings, our existing tools definitely have room for improvement.
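
To make the idea of fusing duplicates concrete, here is a minimal Python sketch that collapses alerts sharing the same service and failure point within a time window into one summary. The `Alert` fields, the `group_alerts` helper and the five-minute window are assumptions made for this example, not the behaviour of any particular tool.

```python
from dataclasses import dataclass


@dataclass
class Alert:
    service: str      # hypothetical field: the service that raised the alert
    check: str        # hypothetical field: the failing check or failure point
    timestamp: float  # seconds since epoch


def group_alerts(alerts, window_seconds=300):
    """Collapse alerts with the same (service, check) that arrive within
    `window_seconds` of the first alert in their group into one summary."""
    groups = []       # summaries, in order of first occurrence
    open_groups = {}  # (service, check) -> most recent summary for that key
    for alert in sorted(alerts, key=lambda a: a.timestamp):
        key = (alert.service, alert.check)
        current = open_groups.get(key)
        if current and alert.timestamp - current["first_seen"] <= window_seconds:
            # Same failure point, still inside the window: just count it.
            current["count"] += 1
            current["last_seen"] = alert.timestamp
        else:
            # New failure point, or the old window has expired: start a group.
            current = {
                "service": alert.service,
                "check": alert.check,
                "first_seen": alert.timestamp,
                "last_seen": alert.timestamp,
                "count": 1,
            }
            open_groups[key] = current
            groups.append(current)
    return groups
```

Each summary can then be sent as a single notification ("latency check on api fired 12 times in the last 5 minutes") instead of twelve separate pings.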

Essentially, alerts should have the following features:

- Be as limited in quantity as possible - you don’t need multiple alerts for the same failure point

- Be actionable - an intelligent alert doesn’t just state the problem, but gives additional information (including but not limited to the cascading impact of the alert, a possible resolution, a quick link to a dashboard for more details, etc.)

- Be directed to the right person - an alert should be routed to the relevant point of contact and not add fatigue to the entire team.

- Be sent in advance of failure - the most effective alert is one that arrives before the failure, giving teams time to fix things before S**T hits the fan!
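
As a rough sketch of what "actionable" and "directed to the right person" can look like in practice, the Python snippet below builds an alert payload that names a single owner and carries context with it. The `OWNERS` map, the `build_alert` helper and every field name are hypothetical and chosen only for illustration.

```python
# Hypothetical ownership map: which on-call rotation owns which service.
OWNERS = {"payments": "payments-oncall", "search": "search-oncall"}
DEFAULT_OWNER = "sre-oncall"  # assumed fallback when a service has no owner


def build_alert(service, problem, impact, suggested_fix, dashboard_url):
    """Return an alert payload that is routed to one owner and explains
    the impact, a possible resolution and where to look next."""
    return {
        "route_to": OWNERS.get(service, DEFAULT_OWNER),
        "title": f"[{service}] {problem}",
        "impact": impact,
        "suggested_fix": suggested_fix,
        "dashboard": dashboard_url,
    }
```

The point of the design is that the person who receives the alert should not have to open three other tools to find out whether it matters and what to do about it.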

Over and above these features, we have also noticed that engineers tend to stress out even when the number of alerts is low. The silence sends the overthinking mind into a spiral - “is the system not breaking or is the alerting system itself broken?”

The first step therefore is to trust your system, alerting tool and the workflows that you have set up. The next step of course is to choose the right tool and only then can you go ahead and enjoy a good night’s sleep without the 3am interruptions!
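
One common way to earn that trust is a heartbeat check (sometimes called a dead man's switch): a test alert fires on a schedule, and you are notified only when it stops arriving. The sketch below assumes you record the time the last heartbeat was received; the function name and the two-minute default are illustrative assumptions.

```python
import time


def alerting_is_alive(last_heartbeat, max_silence_seconds=120, now=None):
    """Return True if the alerting pipeline's heartbeat was seen recently.

    `last_heartbeat` is the epoch time at which the most recent scheduled
    test alert made it through the pipeline. `now` can be injected for
    testing and defaults to the current time.
    """
    now = time.time() if now is None else now
    return (now - last_heartbeat) <= max_silence_seconds
```

If this returns False, the silence is coming from a broken pipeline rather than a healthy system, and that is worth a page in its own right.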

How can Last9 help?

Last9’s SLO Manager tool allows you to seamlessly set and manage SLOs for your services. It’s a simple 3 step process:

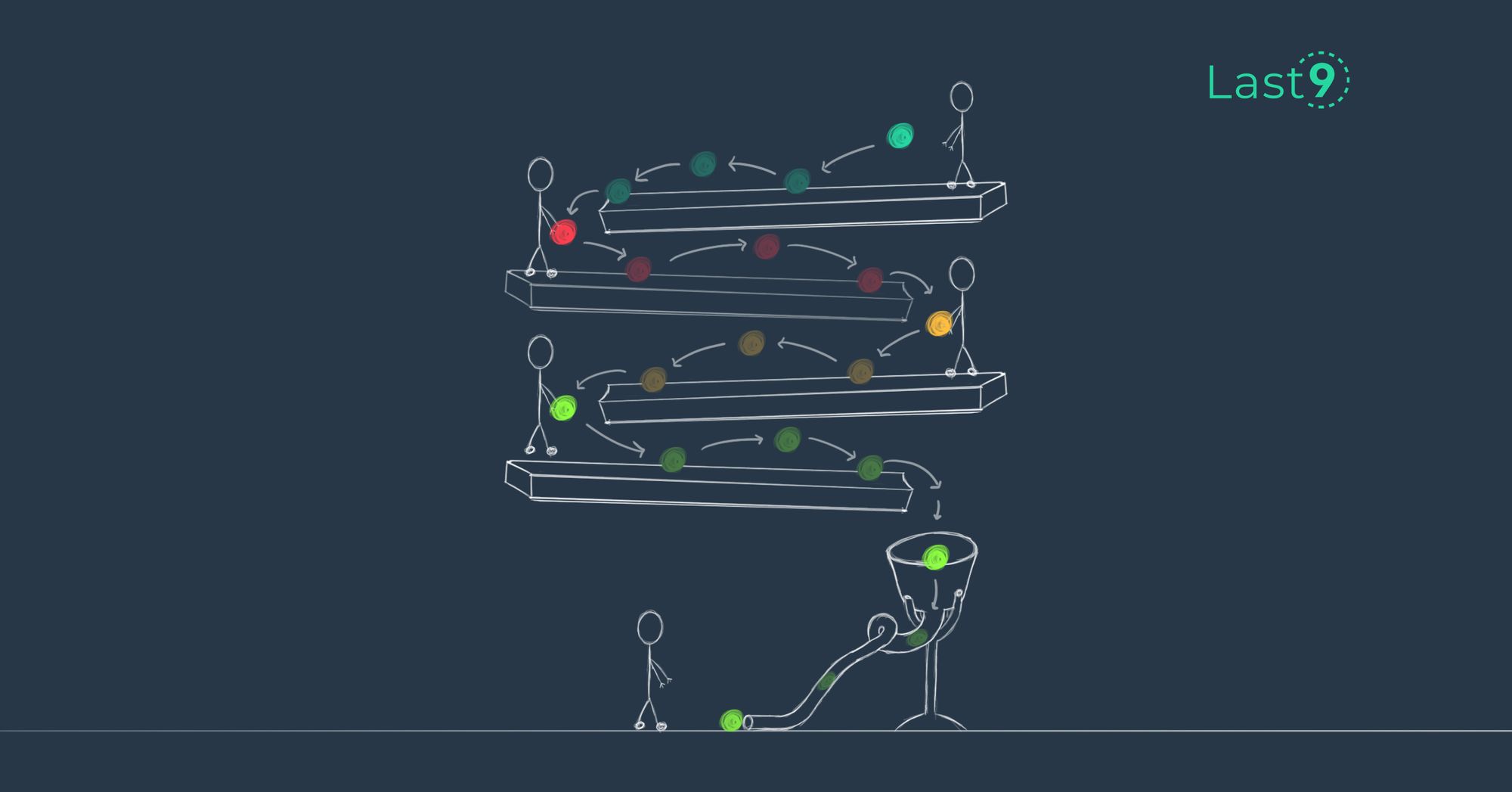

Source metrics from the multiple silos where your data lives → define your Service Level Indicators and Objectives → connect through a webhook to your favourite alerting system (Slack, PagerDuty, Opsgenie, email, etc.).
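
For readers new to SLIs and SLOs, here is a minimal Python sketch of the arithmetic behind the second step, using an availability-style indicator. It illustrates general SRE practice under our own assumptions and is not how SLO Manager is implemented; the function names are hypothetical.

```python
def availability_sli(good_events, total_events):
    """Service Level Indicator: fraction of events that were good."""
    if total_events == 0:
        return 1.0  # assumption: no traffic counts as fully available
    return good_events / total_events


def error_budget_remaining(good_events, total_events, slo_target):
    """Fraction of the error budget still unspent in this window.

    `slo_target` is the objective as a fraction, e.g. 0.999 for 99.9%.
    1.0 means nothing is spent, 0.0 means the budget is exhausted and a
    negative value means the objective has been breached.
    """
    if total_events == 0:
        return 1.0
    allowed_bad = (1 - slo_target) * total_events
    actual_bad = total_events - good_events
    if allowed_bad == 0:
        # A 100% objective leaves no budget at all.
        return 1.0 if actual_bad == 0 else float("-inf")
    return 1 - actual_bad / allowed_bad
```

With a 99.9% objective over 100,000 requests, 100 failures are allowed; 50 failures leave roughly half of the budget, which is a calmer signal to act on than a raw error count.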

What you see going forward is the most manageable system of alerts that you have ever experienced. We (cheekily) call it sleep-friendly-alerting: primarily because it grew out of our own pain point of those midnight interruptions and fires!

Here’s how it works:

- We understand what is “normal” for your system: Last9 analyzes your historical metrics to understand expected levels of important system metrics (throughput, availability, latency etc.)

- Notice when “normal” is about to break: Before an outage reaches its peak, we can detect that your system’s normal levels are shifting and that things may break. This allows us to alert you that a service is “under threat”.

- Send intelligent alerts that are actionable: Our alerts now know when your system is about to break. However, we go one step further and tell you the potential cascading effects of a particular failure and point you instantly to the breaking SLO and service.
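
To give a feel for the first two steps, here is a deliberately simple Python sketch that learns a "normal" band from historical samples and flags a metric once several recent samples fall outside it. This is a textbook mean-and-standard-deviation baseline used purely for illustration; it is an assumption on our part and not a description of the analysis Last9 actually runs. The function name and thresholds are hypothetical.

```python
from statistics import mean, stdev


def is_under_threat(history, recent, sigmas=3.0, min_breaches=3):
    """Flag a metric when at least `min_breaches` recent samples fall
    outside mean +/- sigmas * stdev of the historical samples."""
    baseline = mean(history)
    spread = stdev(history)  # requires at least two historical samples
    lower = baseline - sigmas * spread
    upper = baseline + sigmas * spread
    breaches = sum(1 for value in recent if value < lower or value > upper)
    return breaches >= min_breaches
```

Requiring several breaches rather than one is what keeps a single noisy sample from waking anyone up.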

But let's accept one thing before you leave. Your systems will go wrong. Surprising side effects will emerge. You want to find them as fast and as early as possible. And if you are the on-call engineer at 3 AM and see an alert from Last9, you can skip cursing us and be absolutely sure that it’s not just another cry of “wolf”!

Akshat Goyal
